Webinar: Addressing Concerns About AI in Law

AI in Law: Your Top 8 Concerns, Addressed (Webinar)

Will generative AI take my job in law?

The real question is… whose job? And is it a job that a trained lawyer (or any human, for that matter) should be doing? “To be blunt, there are some jobs – or I should say some portion of our jobs – we want technology to take so that we can do the rest of our jobs better and more efficiently.” (Umair Mahajir)

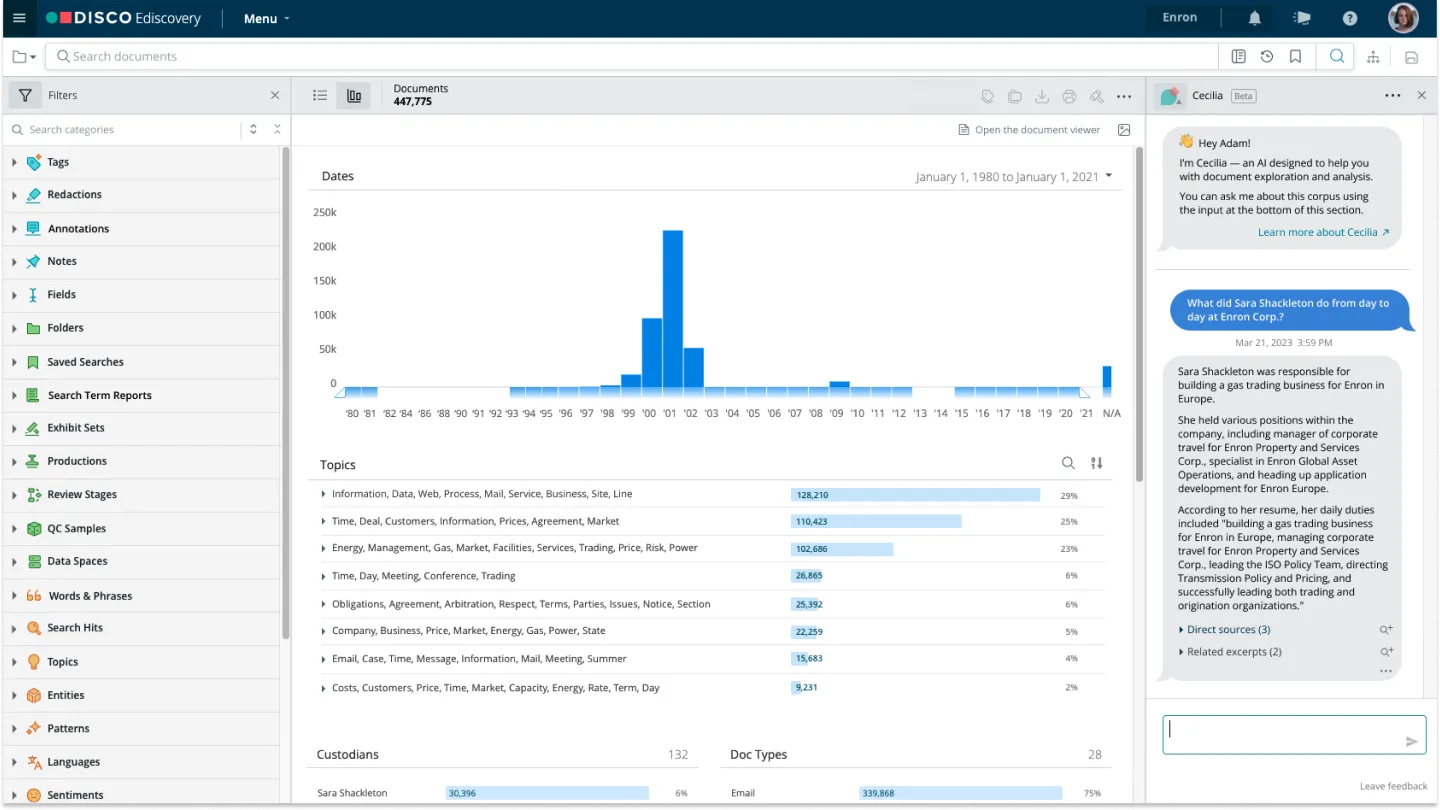

With new legal technology that can build a timeline in minutes or surface hot docs through a simple chat interface, we’re leaving behind the days of junior associates pulling all-nighters organizing papers on the conference room table, or spending months combing through emails.

What about generative AI hallucinations and the law?

What’s a hallucination in generative AI? In short: language-generating AI can produce correct answers, but it can also fabricate incorrect ones. These fabricated answers are called “hallucinations.”

So how much should legal practitioners worry about hallucinations?

“This is not actually a concern about the technology. This is a concern about professional standards, process and workflow…. As lawyers, we always need to make sure we are validating conclusions.” (Umair Mahajir)

The bottom line: be smart and do your homework. Validate the information you receive from generative AI just as you would validate information from any other source.

…plus six more key concerns about generative AI and law

Watch our on-demand webinar to unpack six other concerns, including AI adoption, lawyer-client confidentiality, and more.

Inspired to see how generative AI can transform your legal practice? Check out our webinar on DISCO’s revolutionary Cecilia AI suite, or get a product demo.